4 months of progress towards eliminating green screen from playoff contention.

Hi, I’m Kai. I’m an 18y/o filmmaker who’s using machine learning to help me make better movies.

I like to use visual effects in the short films I make (and publish on TikTok and YouTube). I frequently use green screen in my workflow.

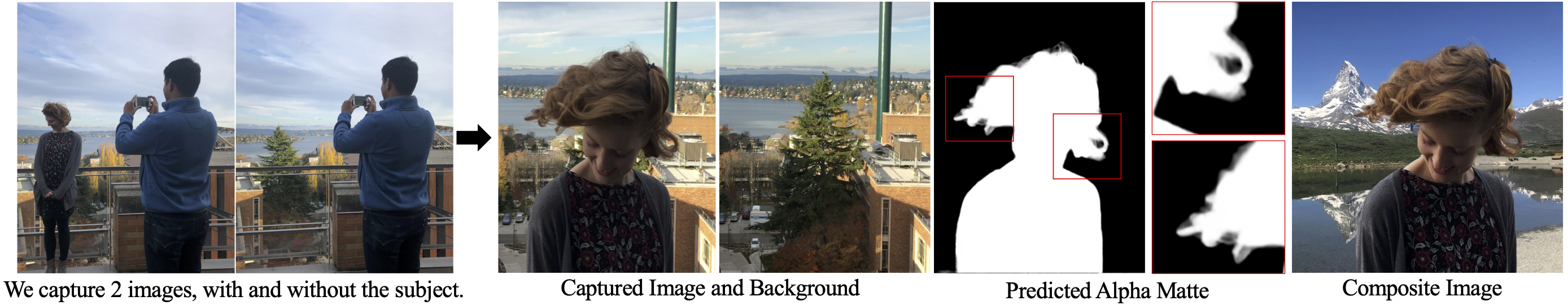

Green screen is extremely handy because it lets me “cut out” a foreground element (like a person) from the background. This process is known as keying. Then I can composite that foreground on top of any other background I want:

The problem is, green screen is a pain to work with. It takes up a lot of space, it must be wrinkle-free and evenly lit, and you’ve got to frame every shot so that the subject is always in front of it. Overall, not ideal. We can do better.

Doing better

This project started after I watched this video from the excellent channel Two Minute Papers on YouTube. The video covers a paper from the University of Washington which shows that deep learning can be used to do the job of green screen when there’s no green screen present.

Example inputs and outputs to the network created by Soumyadip Sengupta and his team.

Fascinated, I soon found an updated v2 of the original paper which could run in real-time. Both v1 and v2 seemed very promising, but they both had one major drawback – they couldn’t handle moving cameras. This was a bit of a dealbreaker for me, because I really like moving camera shots.

I decided I wanted to make my best effort to extend the research I’d found. The only problem was that I knew barely anything about machine learning.

I spent the next 11-ish weeks learning as much as I could about ML. I started with Andrew Ng’s iconic introductory ML course (thanks for the recommendation, HN!), and worked my way up to Coursera’s Deep Learning and GAN specializations.

After completing these, I began work on replicating the algorithm presented in the v2 paper from the University of Washington. Even though their code is open-sourced on GitHub, I wanted to write my own implementation for two reasons:

A.) I knew I’d get a much deeper understanding of the problem if I did.

B.) It would be nice to legally own the copyright to the code I wrote.

After grappling with PyTorch errors for a few weeks and bumbling about without a clue what I was doing, I finally got my model to output something that wasn’t random noise:

This was about three weeks ago at the time of writing. Things have been moving quickly since then.

Making the thing do the thing

From there, it was a big long process of iterating on my network design many times, bringing it closer to Sengupta et al.’s architecture. Write a few lines of code, test, debug, repeat.

The training data that I give to Howard (yes, the neural network’s name is Howard. You can call him Howie if you prefer) consists of thousands of images that I create by compositing different green-screen clips of people onto random backgrounds, a form of data synthesis.

Howard’s goal is to produce what’s known as an alpha matte. An alpha matte is an image used to create the cut-out of the foreground. Wherever the matte is white, the original image is preserved, and wherever the matte is black, the image becomes transparent.
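To make the matte idea concrete, here is a minimal sketch of how a matte blends a foreground onto a new background, which is also roughly how a synthetic training image could be built. It is an illustration only, not Howard's actual code: the `composite` function, the array shapes, and the tiny 2x2 stand-in images are all assumptions made up for this example.

```python
import numpy as np

def composite(foreground, background, matte):
    """Blend a foreground over a background using an alpha matte.

    foreground, background: float arrays of shape (H, W, 3), values in [0, 1]
    matte: float array of shape (H, W); 1.0 (white) keeps the foreground,
           0.0 (black) makes it fully transparent
    """
    alpha = matte[..., np.newaxis]  # add a channel axis so it broadcasts over RGB
    return alpha * foreground + (1.0 - alpha) * background

# Tiny 2x2 stand-ins for a keyed green-screen frame and a random background.
foreground = np.full((2, 2, 3), 0.8)
background = np.full((2, 2, 3), 0.2)
matte = np.array([[1.0, 0.0],
                  [0.5, 0.0]])

image = composite(foreground, background, matte)
```

Where the matte is 1.0 the result is pure foreground, where it is 0.0 the background shows through, and values in between give a soft blend (useful for hair and motion blur).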

In order to train Howard, I compare his guesses for what he thinks the matte should look like against the ground-truth real matte (which I extracted from the green screen clip when I originally synthesized the data). Based on that comparison, Howard can make changes to himself in order to get better and better at guessing what the matte should be.
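In PyTorch, that compare-and-adjust loop might look something like the sketch below. Everything here is an assumption for illustration: the tiny two-layer `model` is a hypothetical stand-in (nowhere near the paper's architecture), the data is random, and the L1 loss is just one common choice for comparing mattes rather than the exact objective used for Howard.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Hypothetical stand-in network: maps an RGB image to a one-channel matte.
model = nn.Sequential(
    nn.Conv2d(3, 8, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.Conv2d(8, 1, kernel_size=3, padding=1),
    nn.Sigmoid(),  # squash outputs into [0, 1], like a matte
)
optimizer = torch.optim.Adam(model.parameters(), lr=1e-2)
loss_fn = nn.L1Loss()  # assumed loss: mean absolute pixel difference

# Random tensors standing in for a batch of synthesized images, with fake
# "ground-truth" mattes derived from image brightness so there is something to learn.
images = torch.rand(4, 3, 16, 16)
true_mattes = (images.mean(dim=1, keepdim=True) > 0.5).float()

losses = []
for step in range(20):
    predicted = model(images)               # the network's guess at the matte
    loss = loss_fn(predicted, true_mattes)  # compare the guess to the real matte
    optimizer.zero_grad()
    loss.backward()                         # work out how each weight affected the error
    optimizer.step()                        # nudge the weights to reduce it
    losses.append(loss.item())
```

Each pass through the loop is one round of "guess, compare, adjust"; over many rounds the recorded loss should generally shrink as the guesses get closer to the true mattes.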

Here, you can see some outputs from an early version of Howard, who at the time was a very simplified version of the paper’s architecture. Notice how the mattes are blurry, indistinct, and don’t look anything like the silhouettes of the people in the training data:

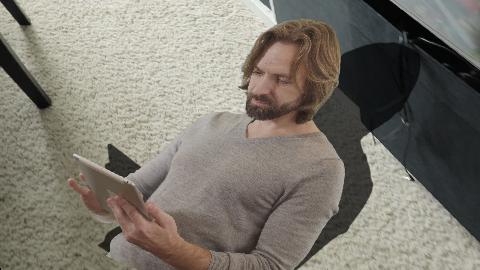

After a few weeks of tweaking and making Howard more complex (and closer to the paper), he started producing sharper outputs that were much more accurate. Here are some outputs he made a few days ago:

When I saw those outputs, I was ecstatic, because they prove that my idea is viable!

The really fun part about this whole process so far, though, has been the outputs Howard generates when I write the wrong code. At one point, I included too many batch normalization layers, which resulted in some interesting black and white detail soups:

Later on, I started requiring the network to generate a color foreground as part of its output, producing full RGB psychedelic trippiness in the early stages of training when Howard is still figuring out what he’s supposed to be doing. These are my personal favorites:

Conclusion

All in all, these past 4 months have been wild. I’ve gone from knowing almost nothing about machine learning to writing my own implementation of a research paper in PyTorch! One Ask HN post has changed my whole life.

I’ve thoroughly enjoyed the whole process, and I’m very much looking forward to the next couple weeks, because my gut tells me the network is really going to start taking shape soon. I’m on the home stretch, and I’m really looking forward to the day I get the whole darn thing to just work 100%. It’s gonna be epic.

Thanks for reading,

– Kai

P.S. I’m a part of the Pioneer tournament! Look out for my username, mkaic, on the global leaderboard :)